Sip n’ Sense

A multisensory straw that helps people track hydration through sight, sound, and touch

We designed and tested a prototype straw that provides visual, auditory, and haptic cues to help people monitor hydration more accurately. The project explored how sensory feedback influences engagement and accessibility in health-related interfaces.

This project was part of Multisensory Design, a class where we explored how a multisensory approach can make interfaces more accessible to both disabled and nondisabled users.

Role: App Designer, Hardware Developer (Arduino & Sensor Integration)

Timeline: 7 Weeks (Sep - Oct 2025)

Team: Journey Brown-Saintel, Sneha Yalavarti

Award: NYU Prototyping Fund

Our Process

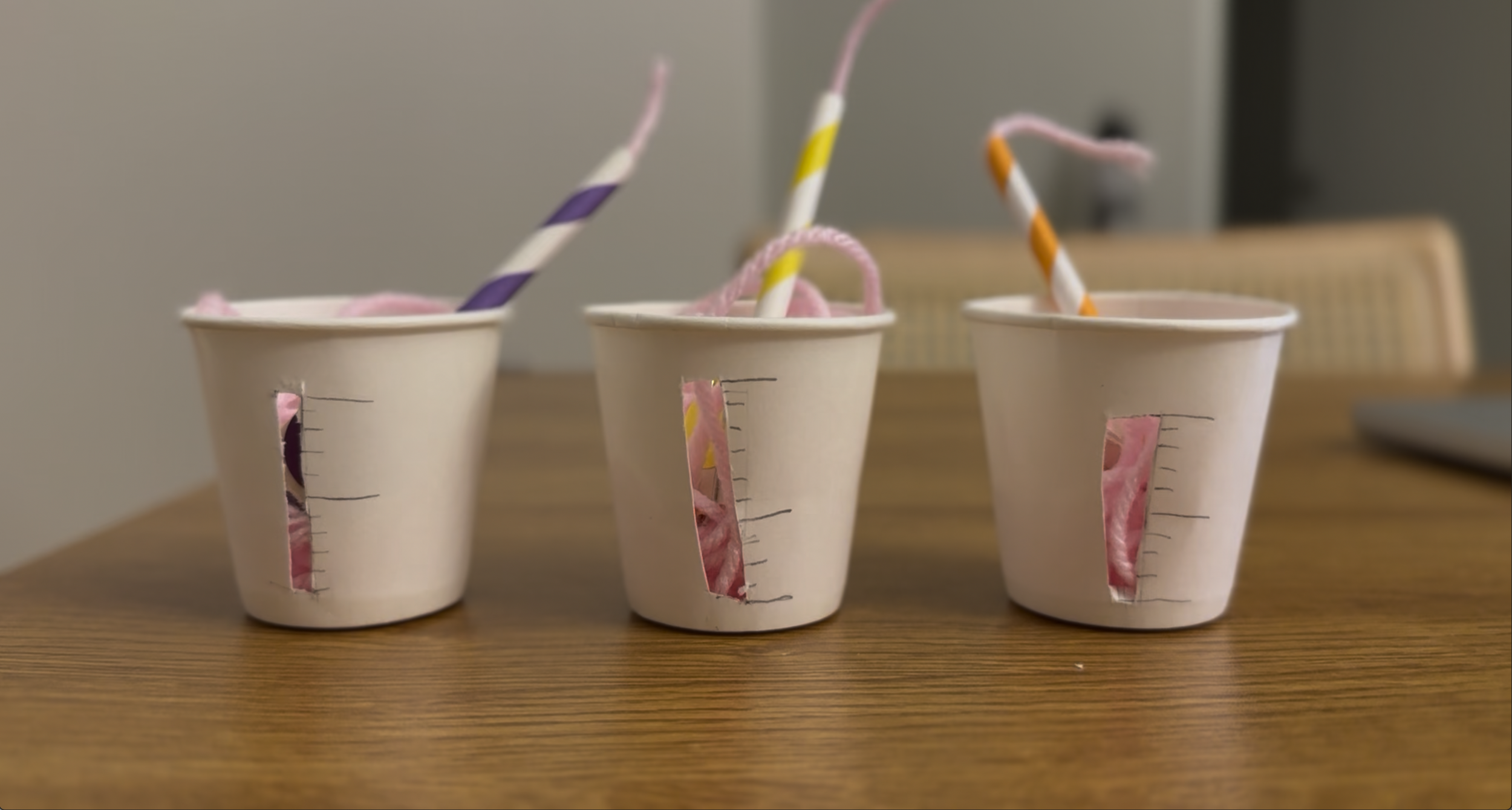

During our initial prototyping phase, we explored which sensory output was most intuitive. We rapidly prototyped a basic system: a small cup and straw designed to indicate 2 oz increments.

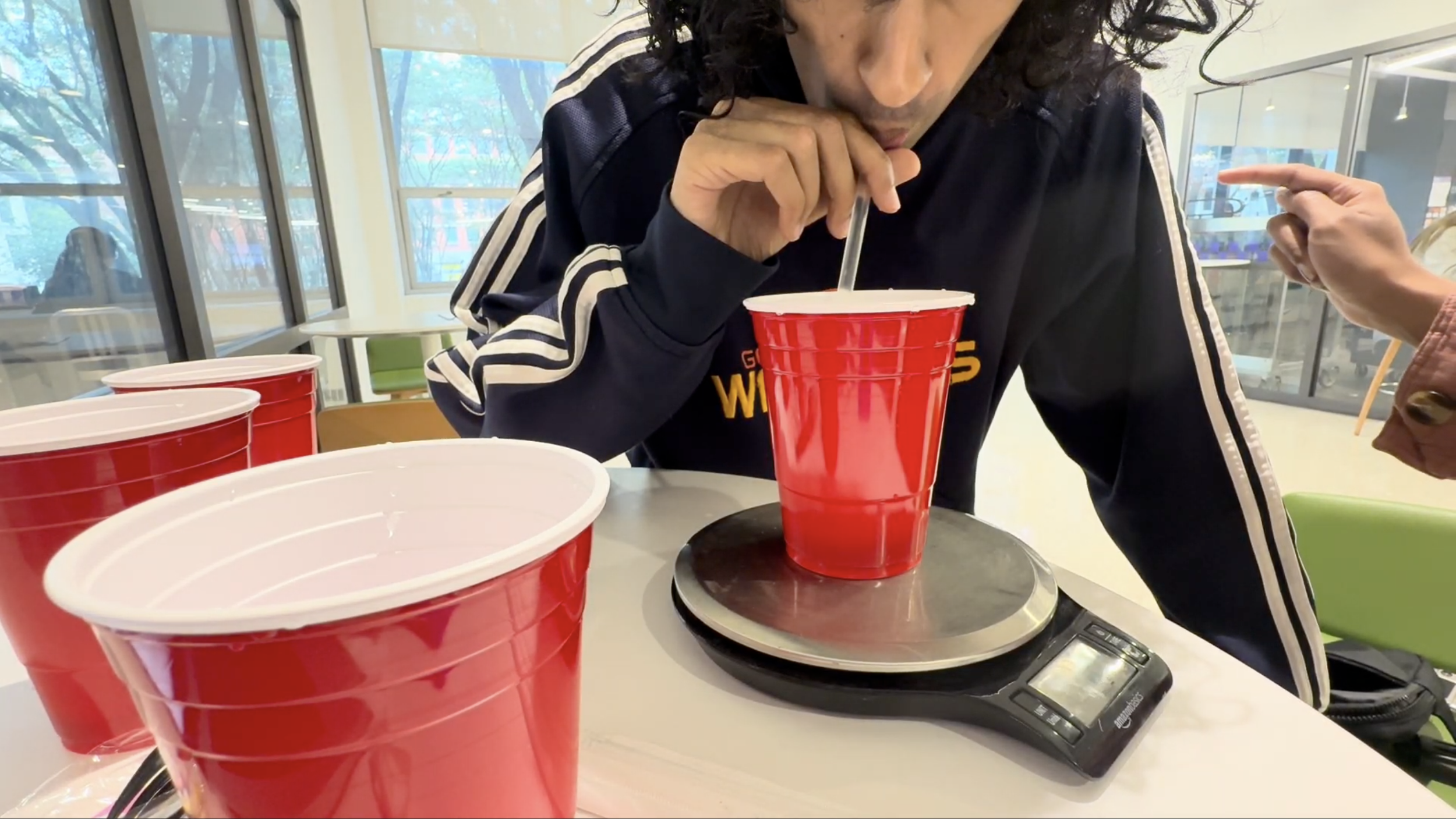

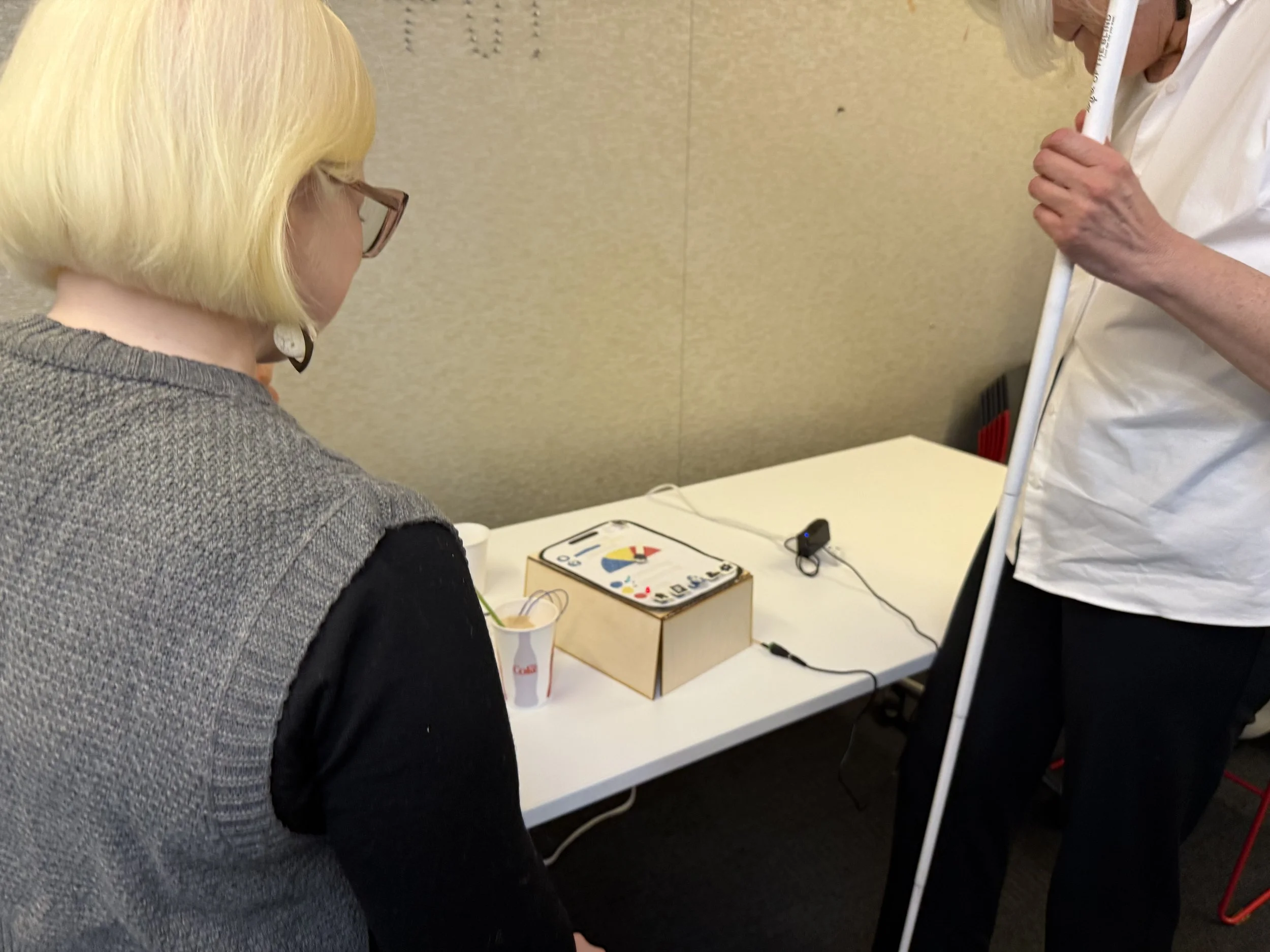

This initial prototype was about testing user intuition. This scene shows our usability testing with participants. They were asked to drink what they thought was 2 oz of water while we provided three different feedback modalities in each round.

Each test showed how quickly people adapt when information comes through senses other than sight.

Video Description: Showing the sensor calibration process for the prototype. As the Arduino code runs, the water-level sensor detects changes in the cup and triggers corresponding LED and servo movements.

We secured funding from the NYU Prototyping Fund, which allowed us to purchase Arduino water sensors.

We moved into physical computing, experimenting with sensor placement and writing the Arduino code needed to translate raw sensor data into accurate water-level measurements. The funding let us turn our proof-of-concept into a working, sensor-driven prototype.
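To illustrate the kind of translation described above, here is a minimal sketch of a two-point calibration that converts a raw analog reading into a fill fraction. It is not our actual project code. The function names and the calibration readings (`rawEmpty`, `rawFull`) are hypothetical, and it assumes the sensor responds roughly linearly between empty and full, which may not hold for every water-level sensor.

```cpp
// Hypothetical two-point calibration: raw sensor reading -> fill fraction (0.0 to 1.0).
// rawEmpty and rawFull are readings you would record yourself with an empty
// and a full cup; they are illustrative parameters, not measured values.

// Keep a value inside the 0.0 to 1.0 range.
constexpr float clamp01(float x) {
    return x < 0.0f ? 0.0f : (x > 1.0f ? 1.0f : x);
}

// Linearly interpolate between the empty and full calibration points.
// Returns 0.0 if the two calibration points are identical (avoids dividing by zero).
constexpr float fillFraction(int raw, int rawEmpty, int rawFull) {
    return (rawFull == rawEmpty)
        ? 0.0f
        : clamp01(static_cast<float>(raw - rawEmpty) /
                  static_cast<float>(rawFull - rawEmpty));
}
```

On an Arduino, `raw` would typically come from `analogRead()` on the sensor pin. Clamping keeps noisy readings outside the calibrated range from producing impossible levels.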

Video Description: Assembling the Sip n’ Sense prototype. Wiring LEDs, adding Braille labels, and creating tactile color guides for the water-level gauge.

In the final phase, we focused on holistic design. We created a large paper prototype for the accompanying responsive mobile app, demonstrating how the data could be tracked digitally.

We added raised tactile textures and Braille so users with low vision could physically explore how the interface would look and feel.

Video Description: A person drinks from a straw in front of the Sip n’ Sense prototype. As the water level goes down, the arrow moves to the corresponding color and a sound is played.

See

The blue LED lights up when the cup is full. As the water level drops, the yellow LED turns on to signal that the cup is almost empty. When the cup is completely empty, the red LED illuminates to indicate it’s time to refill.
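The LED behavior above can be sketched as a simple threshold check. This is an illustrative sketch rather than our exact code: the names and the cutoff percentages (`kLowPercent`, `kEmptyPercent`) are assumptions chosen for the example.

```cpp
// Which LED should be lit for a given water level.
enum class Led { Blue, Yellow, Red };

// Hypothetical thresholds, expressed as percent of a full cup.
constexpr int kLowPercent = 25;   // at or below this: "almost empty"
constexpr int kEmptyPercent = 5;  // at or below this: "empty"

// Blue while there is plenty of water, yellow when nearly empty, red when empty.
constexpr Led ledFor(int percentFull) {
    return percentFull <= kEmptyPercent ? Led::Red
         : percentFull <= kLowPercent   ? Led::Yellow
         : Led::Blue;
}
```

In an Arduino loop, the returned value would decide which LED pin to drive high with `digitalWrite()` while the other two are driven low.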

Hear

No sound plays when the cup is full. As the cup nears empty, the speaker emits a pulsing tone at steady intervals. Once the cup is empty, a continuous tone plays to alert the user to fill their cup.
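One way to express the three sound states is a function that decides, at any moment, whether the speaker should be sounding. This is a hedged sketch: the thresholds and pulse timing below are made-up values for illustration, not the ones used in our prototype.

```cpp
// Hypothetical thresholds (percent of a full cup) and pulse timing (milliseconds).
constexpr int kLowPercent = 25;                  // at or below: pulsing tone
constexpr int kEmptyPercent = 5;                 // at or below: continuous tone
constexpr unsigned long kPulsePeriodMs = 1000;   // one full on/off cycle
constexpr unsigned long kPulseOnMs = 500;        // "on" part of each cycle

// Returns true when the speaker should be sounding at time nowMs.
// Full cup: silent. Nearly empty: on for the first half of each period.
// Empty: always on.
constexpr bool speakerOn(int percentFull, unsigned long nowMs) {
    return percentFull <= kEmptyPercent ? true
         : percentFull <= kLowPercent   ? (nowMs % kPulsePeriodMs) < kPulseOnMs
         : false;
}
```

On an Arduino, `nowMs` would usually be the value of `millis()`, and the result would switch between `tone()` and `noTone()` on the speaker pin. Deriving the pulse from the clock avoids blocking the loop with `delay()`, so the LEDs and gauge can keep updating.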

Touch

As the water level decreases, the tactile gauge meter can be felt shifting gradually from the blue region (full) to the red region (empty), allowing users to sense the change through movement and texture.
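The needle movement can be sketched as a linear mapping from water level to servo angle. The angles here are assumptions for illustration (0 degrees for the blue "full" end, 180 degrees for the red "empty" end); the real gauge may use a different sweep.

```cpp
// Hypothetical servo angles for the two ends of the tactile gauge.
constexpr int kAngleFull = 0;     // needle at the blue (full) end
constexpr int kAngleEmpty = 180;  // needle at the red (empty) end

// Keep the level inside 0 to 100 percent.
constexpr int clampPercent(int p) {
    return p < 0 ? 0 : (p > 100 ? 100 : p);
}

// Linearly map percent full to a needle angle in degrees.
constexpr int gaugeAngle(int percentFull) {
    return kAngleEmpty +
           (kAngleFull - kAngleEmpty) * clampPercent(percentFull) / 100;
}
```

With the Arduino Servo library, the result could be passed to `write()` on the servo object so the needle follows the water level as it drops.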

Professors Georgina Kleege (UC Berkeley) and Sugandha Gupta (Parsons), both with low vision, attended our final presentation and engaged with our prototype.

Video Description: Professor Kleege takes a sip through the straw while Professor Gupta explores the tactile gauge, feeling the needle move in response to changing water levels.

Video Description: Professor Sugandha Gupta also takes a sip through the straw as Professor Kleege explores the prototype.

Thanks to my amazing teammates who helped me bring this project to life during a fast-paced 7-week sprint. And a BIG thank you to Professor Lauren Race for being an inspiration.

This experience challenged me to think about what inclusive design really means. I learned to think beyond visuals and explore how sound, touch, and motion can create richer, more inclusive interactions.